This post was transcribed from imitone’s Kickstarter page, where the original post and an audio version of it are also available.

I’m calibrating! I’m calibrating!! I’m fine-tuning a training model, generating and analyzing billions of datapoints, calculating probabilities and building a time-critical pitch tracker capable of — ah — ah, wait, I shouldn’t get ahead of myself.

I’ve been busy. Following the plan from our last update, I have devoted most of this year to research and development on the next generation of imitone’s technology — probably the most advanced pitch tracker in the world. A few weeks from now, it will be in your hands as part of imitone beta 0.10.

Most exciting of all, it will be evolving.

As imitone’s new “brain” develops, it will respond quicker, cleaner and more accurately to a wider range of voices and singing styles. It will work in noisier spaces. It will consume less CPU power — a big consideration for our mobile apps. Finally, it will lay the foundation for important features like automatic scale detection, automatic pitch range and the intelligent note selection described in our last update.

It has taken a long time and a lot of work, but this is a huge step toward the dream.

The coming update will bring a few of those benefits, and will be followed by several smaller updates with gradual improvements. Next week, we’ll show you a preview of the new version and our plans for 2020.

For now, read on to learn about what we’ve been doing in 2019, and how we’re building the new imitone.

Evan’s 2019

Over the last year I’ve traveled across the USA in a motor home while continuing my research.

January: Finished the first version of our custom machine learning tool, the “calibrator”. Showed imitone at NAMM 2019 with the MIDI Manufacturers Association.

February: Contributed to pitch-related features in MIDI 2.0. Made plans for 2019 research, development and marketing. Shot tutorial videos with a new team member.

March: Contributed to MIDI 2.0 Property Exchange. Designed a secret planned feature. Made another tutorial. Developed marketing plans. Attended GDC.

April: Lived in the Mojave desert. Resumed work on the calibrator, training synthesizer, theory of pitch tracking and the ability to run imitone’s machine learning on cloud computers. Made social media plans.

May: Lived on solar power in the mountains of California. Tested several experimental SAHIR designs. Developed mathematical techniques for high-fidelity filtering.

June: Visited with computational creativity experts in Santa Cruz. Integrated the new filtering methods into imitone’s technology. Used attractor theory to improve imitone’s learning system.

July: Investigated and fixed several design flaws in the new SAHIR. Started running imitone’s machine learning on cloud computers. Visited Crater Lake in Oregon.

August: Further improved the training tools and the ability to run them in the cloud. Made a significant improvement to the SAHIR’s pitch-following.

September: Developed a CPU budgeting algorithm for SoundSelf, which might be used in imitone too. Interviewed potential additions to the imitone team.

October: Had an early holiday with family and caught the flu. Planned out the 0.10 update. Investigated the new SAHIR’s CPU usage. Made a few “recipe” videos showing imitone techniques.

November: Made a pipeline for using the training data in the live SAHIR. Began testing and tuning the new tech for real-world use. Worked on 0.10’s interface design. Developed a new approach to vibrato tracking and pitch-guide.

December: Getting the new technology ready for action and putting together imitone 0.10!

Taking imitone to the Next Level

My focus with Phase 4 has been on unlocking the potential of imitone’s pitch tracking. To do this, I’m adding a layer of machine learning to imitone’s original technology, and building a collection of tools to help me oversee the process.

The Fundamentals

Let’s start with a quick summary of how imitone has always worked.

If you sing beneath a concrete tunnel, into a plastic pipe or onto the strings of a guitar, these things will “resonate” with the tone of your voice. If you sing at just the right pitch, the resonance will be loud, bright and long-lasting. Otherwise, it will be quiet and dull.

Each string on a guitar has a specific pitch. If you sing a note that falls between those pitches, the strings won’t resonate. imitone’s early prototypes worked like hundreds of guitar strings — so that any pitch you could sing would resonate one of them. However, there was a problem: if the pitch of your voice is changing, it will only match up with each string for a moment — and none of them will resonate strongly. In practice, voice pitch wobbles and slides a lot.
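A small simulation shows the problem with fixed strings. This is a minimal sketch, not imitone's code: it assumes each "string" can be modeled as a lightly damped complex one-pole resonator tuned to 440 Hz. It then compares the strongest ring produced by a steady 440 Hz tone with the ring produced by a tone that slides through 440 Hz. The sample rate, damping `r` and glide speed are illustrative assumptions.

```python
import cmath
import math

def resonator_peak(signal, freq_hz, sample_rate, r=0.999):
    """Peak output magnitude of a fixed complex one-pole resonator.

    y[n] = r * e^(j*w) * y[n-1] + x[n]; r close to 1 gives a long, narrow ring,
    like a lightly damped string tuned to freq_hz.
    """
    pole = r * cmath.exp(2j * math.pi * freq_hz / sample_rate)
    y = 0j
    peak = 0.0
    for x in signal:
        y = pole * y + x
        peak = max(peak, abs(y))
    return peak

def steady_tone(freq_hz, seconds, sample_rate):
    n = int(seconds * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]

def gliding_tone(start_hz, end_hz, seconds, sample_rate):
    # Linear glide: accumulate the instantaneous frequency to get the phase.
    n = int(seconds * sample_rate)
    out, phase = [], 0.0
    for i in range(n):
        f = start_hz + (end_hz - start_hz) * i / n
        phase += 2 * math.pi * f / sample_rate
        out.append(math.sin(phase))
    return out

fs = 8000
steady = resonator_peak(steady_tone(440, 1.0, fs), 440, fs)
glide = resonator_peak(gliding_tone(240, 640, 0.4, fs), 440, fs)
print(f"steady: {steady:.0f}  gliding: {glide:.0f}")
```

The sliding tone only lines up with the 440 Hz string for a few milliseconds, far less than the string's ring time, so its peak stays well below the steady tone's.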

The piece at the heart of imitone is the “self-attuning resonator”, or SAHIR (“say-here”).

The SAHIR resonates with your pitch, but also follows it — as if someone is tuning the guitar really fast to match your voice. When the SAHIR rings loud and bright, it has locked onto your pitch — and will track it faithfully. Because they’re flexible and precise, a handful of SAHIR work better than hundreds of simple resonators.

The original SAHIR uses special measurements to do its two jobs:

- Attune: change pitch to lock onto and follow your voice.

- Detect: determine whether the SAHIR is locked onto a tone.
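To make the two jobs concrete, here is a toy self-tuning resonator. It is a textbook frequency-locked loop, not the SAHIR itself, whose internals aren't described here. The class name `ToyTrackingResonator`, its parameters and the scoring formula are all hypothetical. "Attune" nudges the tuning by however fast the smoothed, demodulated signal is rotating. "Detect" compares the strength of that signal with the input's overall power.

```python
import cmath
import math
import random

class ToyTrackingResonator:
    """Hypothetical self-tuning resonator: a basic frequency-locked loop.

    Not imitone's SAHIR; it only illustrates the two jobs, attune (retune
    toward the input pitch) and detect (report how locked-on it is).
    """

    def __init__(self, freq_hz, sample_rate, smoothing=0.99, gain=0.001):
        self.freq = freq_hz        # current tuning in Hz
        self.fs = sample_rate
        self.a = smoothing         # one-pole smoothing of the demodulated signal
        self.gain = gain           # how quickly the tuning follows the pitch
        self.phase = 0.0           # phase of the internal reference oscillator
        self.state = 0j            # smoothed, demodulated signal (the "ring")
        self.power = 1e-12         # smoothed input power; tiny start avoids 0-division

    def process(self, x):
        # Shift the input down by the current tuning, then smooth it.
        z = x * cmath.exp(-1j * self.phase)
        prev = self.state
        self.state = self.a * self.state + (1.0 - self.a) * z
        self.power = 0.999 * self.power + 0.001 * x * x

        # Attune: a ring that keeps rotating means we are mistuned.
        # Rotation per sample (radians) converts to a frequency error in Hz.
        if prev != 0:
            drift = cmath.phase(self.state * prev.conjugate())
            self.freq += self.gain * drift * self.fs / (2.0 * math.pi)

        self.phase = (self.phase + 2.0 * math.pi * self.freq / self.fs) % (2.0 * math.pi)

        # Detect: about 1.0 for a steady locked tone, far lower for broadband noise.
        return abs(self.state) * math.sqrt(2.0 / self.power)


def run(tracker, signal):
    return [tracker.process(x) for x in signal]


fs = 8000
tone = [math.sin(2 * math.pi * 450 * n / fs) for n in range(fs)]
rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(fs)]

locked = ToyTrackingResonator(440.0, fs)
tone_scores = run(locked, tone)
noisy = ToyTrackingResonator(440.0, fs)
noise_scores = run(noisy, noise)

print(f"tuned to {locked.freq:.1f} Hz, tone score {sum(tone_scores[-1000:]) / 1000:.2f}")
print(f"noise score {sum(noise_scores[-2000:]) / 2000:.2f}")
```

Starting 10 Hz flat, the toy resonator retunes itself to the 450 Hz tone and reports a high score. On white noise, its score stays low.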

Using these measurements and a little bit of fudge factor, we have managed to make one of the most responsive pitch trackers in the world. And yet… as we know, imitone 0.9 had room to improve. Each measurement is based on a simplified model of the human voice, and has a little bit of uncertainty to it. Add some background noise, or some growl, or a sound that doesn’t match up with our model, and the uncertainty grows, with messy results…

The Evolution

…And so, it became clear that the key was in statistics: the science of uncertainty. Applied statistics leads us to machine learning, where we can create knowledge from examples. I designed a completely new SAHIR — one that could eventually be trained to do the best job possible.

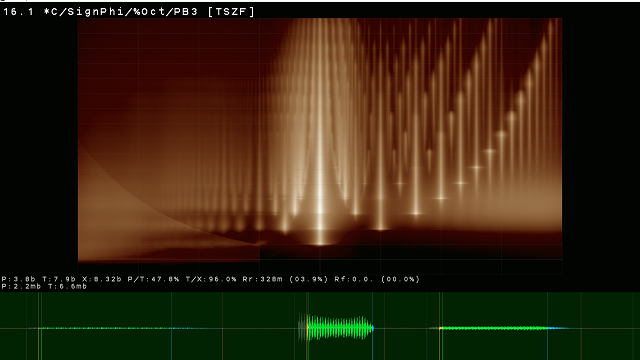

I began studying how the SAHIR’s measurements behave with different sounds. Dozens of sounds, then thousands… then millions, billions. I built a new tool called the “calibrator” to run these musical experiments, plotting the SAHIR’s measurements against the correct answers.

Originally I expected this would be pretty simple: chart the measurements against some example sounds, transform those charts into statistical likelihoods and use those as calibrations in a fine-tuned pitch tracker. The reality has been a little more complicated… Who could have guessed?
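The "simple" version of that plan can be sketched in a few lines. This sketch assumes a histogram-plus-Bayes'-rule approach, which is one common way to turn charts of labelled measurements into likelihoods. It does not describe how the calibrator actually works. The function name, bin count, measurement range, prior and synthetic data are all illustrative assumptions.

```python
import random

def build_calibration(positives, negatives, bins=20, lo=0.0, hi=1.5, prior=0.5):
    """Map a raw measurement to an estimated P(tone | measurement).

    positives: measurements recorded while a known tone was present.
    negatives: measurements recorded on known non-tones (noise, silence, ...).
    Every bin starts with a count of 1 (Laplace smoothing) so sparse bins
    never claim absolute certainty.
    """
    width = (hi - lo) / bins

    def index(m):
        # Clamp out-of-range measurements into the first or last bin.
        return min(bins - 1, max(0, int((m - lo) / width)))

    pos = [1] * bins
    neg = [1] * bins
    for m in positives:
        pos[index(m)] += 1
    for m in negatives:
        neg[index(m)] += 1

    n_pos, n_neg = sum(pos), sum(neg)
    table = []
    for p_count, n_count in zip(pos, neg):
        like_tone = p_count / n_pos        # P(bin | tone)
        like_other = n_count / n_neg       # P(bin | not tone)
        # Bayes' rule with an assumed prior probability of a tone.
        table.append(prior * like_tone / (prior * like_tone + (1 - prior) * like_other))

    return lambda m: table[index(m)]


rng = random.Random(1)
tones = [rng.gauss(1.0, 0.1) for _ in range(10000)]
others = [abs(rng.gauss(0.0, 0.15)) for _ in range(10000)]
p_tone = build_calibration(tones, others)
print(f"P(tone | 1.0) = {p_tone(1.0):.3f}, P(tone | 0.05) = {p_tone(0.05):.3f}")
```

Real measurements overlap far more than these made-up clusters, and several measurements interact. That is part of why the real process turned out to be more complicated.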

The first problem I ran into was… more like an opportunity. My new tool allows me to design plots comparing different measurements in different situations. I made dozens of these, and each gave me a new perspective on billions of datapoints. They gave me insight into behaviors of the SAHIR that were invisible with the tools I had before: situations where it would get stuck, jitter, or fail to recognize a tone… I spent several weeks investigating newfound flaws and opportunities for improvement.

Playing Fair

The second problem is an inevitability with machine learning. To teach imitone to recognize human voices, I need lots and lots of examples and counter-examples. I need to be very careful about how I choose these examples, because they create bias — and imitone needs to be ready for all the different voices it might encounter in the real world.

I tested the original imitone constantly with my own voice, fine-tuning it to feel good when I sing at it. Because of that testing, it works well with my voice, and voices like mine. I’ve learned that there are other kinds of singing voices — even other kinds of vocal cords — which imitone doesn’t understand so well. Therefore, we can say that imitone has been biased toward my singing voice (a “modal baritone”) and against certain others (like those of heavy metal singers).

Many other sensitive technologies also have a habit of working a little better for people who look, sound or act like their creators. Cameras designed to recognize people may behave differently based on ethnicity. Airport security scanners regularly cause trouble for people whose body shape isn’t typical for their gender. Similarly, imitone has had its own biases toward certain singing voices and the western canon of music.

To work against voice bias and build a truly “universal” music tool, I’ll be training imitone against an especially diverse collection of sounds. For now, I’m creating billions of artificial voice sounds which vary more widely than human voices do. If I use real voices to train imitone in the future, I will gather the most diverse collection I can. Finally, future versions of imitone might be able to learn the unique nature of your voice while you sing, but we aren’t quite there yet.
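One way to produce artificial voices that "vary more widely than human voices do" is to draw every synthesis parameter from a deliberately oversized range. Each generated sound can then be labelled with its true pitch. The sketch below is hypothetical: the parameter names, ranges and simple harmonic model are assumptions for illustration and are not imitone's training synthesizer.

```python
import math
import random
from dataclasses import dataclass

@dataclass
class VoiceParams:
    pitch_hz: float       # centre pitch
    vibrato_hz: float     # vibrato rate
    vibrato_cents: float  # vibrato depth (peak deviation)
    rolloff: float        # harmonic k has amplitude 1 / k**rolloff; low = bright
    noise_level: float    # breath or background noise, relative to the fundamental

# Hypothetical ranges, chosen to be wider than typical singing on purpose.
RANGES = {
    "pitch_hz": (40.0, 2000.0),
    "vibrato_hz": (0.0, 12.0),
    "vibrato_cents": (0.0, 300.0),
    "rolloff": (0.3, 3.0),
    "noise_level": (0.0, 1.0),
}

def random_params(rng):
    lo, hi = RANGES["pitch_hz"]
    # Sample pitch on a log scale so every octave is equally likely.
    pitch = math.exp(rng.uniform(math.log(lo), math.log(hi)))
    rest = [rng.uniform(*RANGES[k]) for k in ("vibrato_hz", "vibrato_cents", "rolloff", "noise_level")]
    return VoiceParams(pitch, *rest)

def synthesize(params, seconds, sample_rate, rng):
    """Return (samples, true_pitch_per_sample) for one labelled example."""
    nyquist = sample_rate / 2
    samples, pitches, phase = [], [], 0.0
    for i in range(int(seconds * sample_rate)):
        t = i / sample_rate
        wobble = params.vibrato_cents * math.sin(2 * math.pi * params.vibrato_hz * t)
        f = params.pitch_hz * 2 ** (wobble / 1200)
        phase += 2 * math.pi * f / sample_rate
        # Keep only harmonics at or below Nyquist to avoid aliasing.
        n_harmonics = int(nyquist // f)
        tone = sum(math.sin(k * phase) / k ** params.rolloff for k in range(1, n_harmonics + 1))
        samples.append(tone + params.noise_level * rng.gauss(0.0, 1.0))
        pitches.append(f)
    return samples, pitches


params = random_params(random.Random(0))
audio, truth = synthesize(params, 0.25, 16000, random.Random(1))
```

The per-sample `truth` list is the "correct answer" that a tracker's measurements could be plotted against.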

I’ll be working against imitone’s western bias by introducing more traditions of music, such as the Indonesian and Arabic scales mentioned in our last update. (I’m interested in talking to experts in traditions around the world.) Our fifth and final phase of research will revolve around music theory and the way imitone chooses notes. That will result in big changes to imitone’s scales, keys, tuning and pitch correction.
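Supporting non-Western scales in pitch correction starts with not assuming twelve equal semitones. The sketch below snaps a pitch to the nearest degree of any scale given in cents above a tonic. It is a hypothetical helper, not imitone's note-selection logic. The slendro example uses the common idealization of five equal steps of 240 cents, while real gamelan tunings vary from ensemble to ensemble.

```python
import math

def snap_to_scale(freq_hz, tonic_hz, scale_cents, period_cents=1200.0):
    """Return (nearest scale pitch in Hz, error in cents) for freq_hz.

    scale_cents: ascending degrees within one period, in cents above the tonic.
    """
    cents = 1200.0 * math.log2(freq_hz / tonic_hz)
    period = math.floor(cents / period_cents)
    within = cents - period * period_cents
    # Neighbouring periods' edge degrees let pitches near a boundary snap across it.
    candidates = [scale_cents[-1] - period_cents, *scale_cents, scale_cents[0] + period_cents]
    best = min(candidates, key=lambda c: abs(c - within))
    snapped = tonic_hz * 2 ** ((period * period_cents + best) / 1200.0)
    return snapped, within - best

CHROMATIC_12TET = [100.0 * i for i in range(12)]
# Idealized slendro: five equal steps of 240 cents (real gamelan tunings vary).
SLENDRO_EQUAL = [240.0 * i for i in range(5)]

print(snap_to_scale(450.0, 440.0, CHROMATIC_12TET))
print(snap_to_scale(500.0, 440.0, SLENDRO_EQUAL))
```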

What’s Next?

I’ll be spending the next few weeks fine-tuning the new SAHIR and making some other much-needed improvements to imitone for a winter update. Once it’s in your hands, the team will be collecting as much feedback as we can, continuing to fine-tune and fixing any bugs that have crept in over the last two years.

imitone will be exhibiting at NAMM 2020 with the MIDI Manufacturers Association. If you plan to attend, don’t be a stranger! I also suggest keeping an eye out for announcements about MIDI 2.0. I’ll talk about those in a future post.

For the new year, I’m resolving to be less of a hermit. No, that doesn’t mean I’ll quit living in the woods; it means I’ll be making efforts to be more transparent, more communicative and more active in updating the software. To get there, I’ll be adding some new faces to the imitone team. You might get to meet them in a future article (or at NAMM). If you feel you might have something to bring to the project, shoot us an email. We’re looking to organize, research, teach, design and build.

Thanks for sticking with me on this long ride.

Happy trails from the I.W.S. Coddiwomple,

— Evan